What HTTP headers is googlebot requesting?

I decided to write a blog post about googlebot's HTTP headers because, as far as I know, it is a topic that has not attracted much attention in the SEO community so far. To put it another way, I may be mistaken, but I don't remember seeing any blog posts or articles on SEO blogs that mention what HTTP headers googlebot requests.

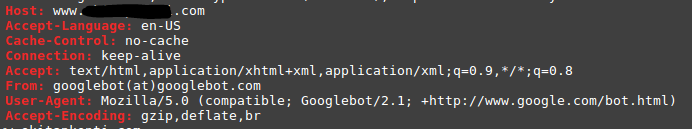

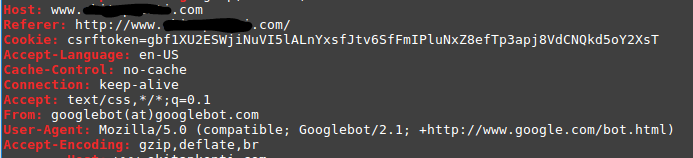

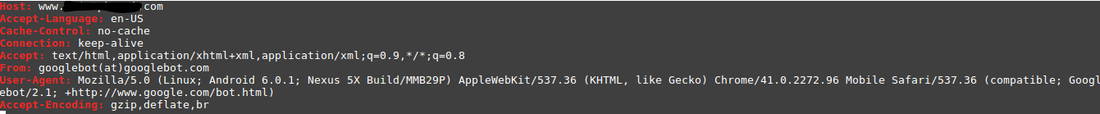

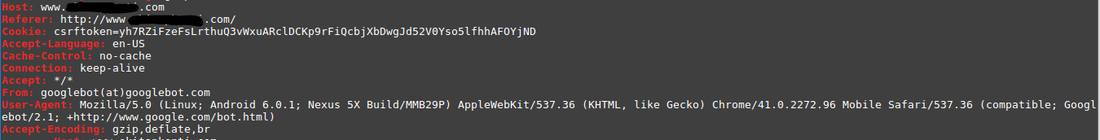

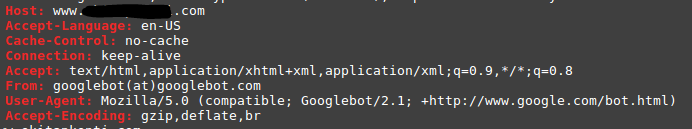

The screenshots show some of the HTTP request headers googlebot sends to an HTTP server, as seen in Google Search Console. They were taken after sending Fetch or Fetch & Render requests from Google Search Console with either Desktop or Mobile: Smartphone selected as the User-Agent.

Please note that the servers of your sites may not receive the same HTTP headers from googlebot. For example, in the screenshots the Accept-Language HTTP header is set to en-US because the requested server sends a Vary: Accept-Language HTTP response header to the client, a header your own server may not send.

Googlebot HTTP Headers: Fetch with User-Agent Desktop

Googlebot HTTP Headers: Fetch & Render with User-Agent Desktop

Googlebot HTTP Headers: Fetch with User-Agent Mobile: Smartphone

Googlebot HTTP Headers: Fetch & Render with User-Agent Mobile: Smartphone

The following screenshots show some of the HTTP headers googlebot sends to an HTTP server during actual crawls, together with the server's responses to those requests.

Googlebot HTTP Headers: Requesting the robots.txt file with the GET method

Googlebot HTTP Headers: The If-None-Match request header used in combination with the If-Modified-Since request header, and the server responding with a 304 Not Modified status code

Googlebot HTTP Headers: Requesting a CSS file with the GET method
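To make the conditional request above more concrete, here is a minimal server-side sketch of how a server might decide whether to answer such a request with 304 Not Modified. It follows the HTTP rule (RFC 7232) that If-None-Match takes precedence and If-Modified-Since is ignored when both are present. The function name `should_return_304`, the assumption that header names arrive lower-cased in a dict, and the simple comma split of entity tags are illustrative choices, not how any particular server works.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def should_return_304(request_headers, current_etag, last_modified):
    """Hypothetical helper: decide if a GET can be answered with 304.

    request_headers: dict with lower-cased header names (assumption).
    current_etag: the resource's current entity tag, e.g. '"abc123"'.
    last_modified: timezone-aware datetime of the resource's last change.
    """
    inm = request_headers.get("if-none-match")
    if inm is not None:
        # If-None-Match takes precedence; If-Modified-Since is then ignored.
        if inm.strip() == "*":
            return True

        def weak(tag):
            # Weak comparison: ignore the W/ prefix on both sides.
            return tag[2:] if tag.startswith("W/") else tag

        # Simplification: assumes no commas inside quoted entity tags.
        tags = {weak(t.strip()) for t in inm.split(",")}
        return weak(current_etag) in tags

    ims = request_headers.get("if-modified-since")
    if ims is not None:
        try:
            since = parsedate_to_datetime(ims)
        except (TypeError, ValueError):
            return False  # An invalid date means the header is ignored.
        if since.tzinfo is None:
            since = since.replace(tzinfo=timezone.utc)
        # HTTP dates have one-second resolution.
        return last_modified.replace(microsecond=0) <= since

    return False  # Unconditional request: send the full 200 response.
```

For example, a resource last modified on Tue, 01 May 2018 12:00:00 GMT would get a 304 when googlebot sends exactly that date in If-Modified-Since, unless an If-None-Match header with a different entity tag is also present.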

Why is it important to know which HTTP headers a crawler requests?

It is important because, when you tell your clients that you will crawl their sites the way googlebot does, you should be sure that your crawler sends the same HTTP headers to their servers as googlebot does. The responses you receive, and the data you later collect from a server, depend on the HTTP headers your crawler sends.

Imagine a server that supports Brotli, and your crawler sends Accept-Encoding: gzip,deflate rather than Accept-Encoding: gzip,deflate,br. You cannot know beforehand how the server will respond when br is missing from the Accept-Encoding request header; although it is very unlikely, it could even respond with 406 Not Acceptable. Hopefully, in the end, you would not tell your client that their site has crawl performance problems, because it is your crawler that doesn't support Brotli, and that is neither your client's nor the server's fault.
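The sketch below shows, under simple assumptions, how a server might pick a response encoding from the Accept-Encoding request header, and why dropping br from a crawler's header can change what the server sends back. The function names and the server's preference order `("br", "gzip", "deflate")` are hypothetical; real servers and CDNs have their own negotiation rules. As a conservative simplification, a missing header is treated here as "no compression".

```python
def parse_qlist(header_value):
    """Parse a header such as 'gzip;q=0.8, br' into {token: q}."""
    prefs = {}
    for item in header_value.split(","):
        item = item.strip()
        if not item:
            continue
        token, *params = [p.strip() for p in item.split(";")]
        q = 1.0  # Default quality when no q parameter is given.
        for p in params:
            if p.lower().startswith("q="):
                try:
                    q = float(p[2:])
                except ValueError:
                    q = 0.0
        prefs[token.lower()] = q
    return prefs


def choose_encoding(accept_encoding, supported=("br", "gzip", "deflate")):
    """Return the best supported encoding the client accepts, or None."""
    prefs = parse_qlist(accept_encoding or "")

    def quality(enc):
        # Fall back to the wildcard '*' if the encoding is not listed.
        return prefs.get(enc, prefs.get("*", 0.0))

    acceptable = [enc for enc in supported if quality(enc) > 0]
    if not acceptable:
        return None  # Send the body uncompressed (identity).
    # Highest q wins; ties go to the server's own order in `supported`.
    return max(acceptable, key=lambda enc: (quality(enc), -supported.index(enc)))
```

With this logic, a crawler sending `gzip,deflate,br` would receive a Brotli response, while one sending `gzip,deflate` would receive gzip, so the two crawls would not measure the same transfer sizes.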

Another scenario is a web page that returns different content based on the perceived country or preferred language of the visitor; in other words, the page returns different content in different languages. Google calls these locale-adaptive pages. This is actually the case for my blog http://www.searchdatalogy.com, which can serve three different versions of its content, in English, French and Turkish, on the same URL. If your crawler sends a French Accept-Language header to a website like mine, your crawl will in most cases be completely different from googlebot's crawl. Googlebot can certainly set an Accept-Language header in languages other than English when it does locale-aware crawling, but most of the time the language it prefers to speak is its own: English. You can find more information about googlebot's locale-aware crawling here: https://support.google.com/webmasters/answer/6144055?hl=en. Now you may be thinking: who in the world would request a page in French from their crawler? That sounds less like an SEO scenario than a movie plot. Well, if you were a French citizen and, coincidentally, you and your friends had the rather bizarre hobby of developing and testing crawlers, believe me, it is very likely that one day or another you would end up crawling a site like mine in French.
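To illustrate why the Accept-Language header changes what a crawler sees on a locale-adaptive page, here is a minimal sketch of server-side language selection among the three languages mentioned above. It is not the implementation used on this blog; the function name `pick_locale`, the English default, and matching on the primary language subtag only (so `fr-FR` counts as `fr`) are simplifying assumptions.

```python
def pick_locale(accept_language, available=("en", "fr", "tr"), default="en"):
    """Hypothetical helper: return the available language preferred most.

    Only the primary subtag is compared, so 'fr-FR' matches 'fr'.
    Falls back to `default` when nothing acceptable is offered.
    """
    best, best_q = default, 0.0
    for item in (accept_language or "").split(","):
        parts = [p.strip() for p in item.split(";")]
        tag = parts[0].lower()
        if not tag:
            continue
        q = 1.0  # Default quality when no q parameter is given.
        for p in parts[1:]:
            if p.lower().startswith("q="):
                try:
                    q = float(p[2:])
                except ValueError:
                    q = 0.0
        primary = tag.split("-")[0]
        # Keep the highest-quality language the site can actually serve.
        if primary in available and q > best_q:
            best, best_q = primary, q
    return best
```

Under this sketch, googlebot's usual `en-US` yields the English version, while a crawler sending `fr-FR,fr;q=0.9,en;q=0.8` would be served the French version of the same URL.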

Accordingly, if you are a client hiring an SEO consultant or working with an SEO agency, or an SEO consultant using a crawler from the market as a service to fulfil your SEO contract, you should ask the SEO consultant or the crawl service provider, respectively, which HTTP headers their crawlers will send while crawling your websites. Finally, as a client, you should monitor the HTTP headers their crawlers send to your servers while crawling your sites, to check that your servers are receiving the promised and correct HTTP headers.
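One way to do that monitoring is to compare the headers your server actually logged for a crawler's requests against the headers the provider promised. The sketch below assumes you can already obtain both as dictionaries (for example, from your web framework's request object or from server logs configured to record request headers); the function name `audit_headers` and the crawler name in the example are hypothetical. Header names are compared case-insensitively, as HTTP field names are case-insensitive.

```python
def audit_headers(received, promised):
    """Compare headers a crawler sent with the headers it was promised to send.

    Returns {header: (promised_value, received_value_or_None)} for every
    promised header that is missing or has a different value.
    """
    # Normalize names to lower case; trim surrounding whitespace in values.
    got = {name.lower(): value.strip() for name, value in received.items()}
    mismatches = {}
    for name, expected in promised.items():
        actual = got.get(name.lower())
        if actual != expected.strip():
            mismatches[name] = (expected, actual)
    return mismatches


if __name__ == "__main__":
    logged = {"user-agent": "ExampleCrawler/1.0", "accept-encoding": "gzip,deflate"}
    promise = {
        "User-Agent": "ExampleCrawler/1.0",
        "Accept-Encoding": "gzip,deflate,br",
        "Accept-Language": "en-US",
    }
    for header, (want, got) in audit_headers(logged, promise).items():
        print(f"{header}: promised {want!r}, received {got!r}")
```

An empty result means every promised header arrived with the promised value; anything else is worth raising with the provider before trusting the crawl data.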

Thanks for taking the time to read this post. I offer consulting, architecture and hands-on development services in web/digital to clients in Europe & North America. If you'd like to discuss how my offerings can help your business, please contact me via LinkedIn.
